Data center networking architectures are undergoing a major transformation, driven largely by the explosive growth of AI. By 2025, AI workloads could account for nearly 30% of all data center traffic, exposing critical limitations in traditional data center networking architectures. Unlike conventional cloud applications, whose traffic flows primarily north-south, AI workloads generate massive east-west traffic between GPU clusters, demanding unprecedented bandwidth, near-zero latency, and adaptive infrastructure.

Recent surveys show that 53% of data center operators expect AI workloads to dominate their inter-facility bandwidth needs within just 2-3 years, surpassing even cloud computing demands. This radical shift is driving innovation across every layer of data center networking, from physical cabling to protocol stacks, while creating new sustainability challenges that could reshape the entire industry.

This article focuses on how AI is transforming data center networking and its impact on the industry.

Before ChatGPT, AI Had Already Been Transforming the World

ChatGPT’s launch in 2022 made quite an entrance, and soon everyone was trying it or at least talking about it. It was a sensational novelty, and its popularity has only grown since. New LLMs have appeared, and this is only the beginning of what AI can offer the public.

However loud chatbot releases have been, AI had been quietly at work long before the wider world took notice. It has affected many, if not all, industries, regardless of company size. Communication networks are no exception: they are intertwined with algorithms and AI-powered tools that help improve network speed and security.

Accurate statistics for 2025 are not yet available; however, early indications suggest that using AI to optimize data center networking could soon reduce latency by 20% and the risk of security breaches by 30%.

Data Center Transformation

The unprecedented demands that AI places on processing power, speed, and efficiency are pushing data centers to evolve. Modern AI applications, especially LLMs and deep learning systems, require specialized hardware configurations and create unique traffic patterns that challenge conventional architectures. This transformation affects every layer of the data center, from racks to cooling systems, but nowhere is the impact more profound than in data center networking architectures. As a result, AI has turned networking into a key point of differentiation in the market.

AI Networking Requirements

Unlike traditional cloud workloads that move data north-south between clients and servers, AI generates enormous east-west traffic between GPU clusters. This requires:

- Ultra-low latency (sub-microsecond) for synchronized distributed training

- Massive bandwidth (800Gbps-1.6Tbps) to prevent bottlenecks

- Lossless transmission to avoid costly AI training interruptions

- Dynamic adaptability to handle unpredictable traffic spikes
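To see why both bandwidth and latency matter for synchronized training, consider a rough alpha-beta cost model of a ring all-reduce, a common pattern GPUs use to exchange gradients. This is a sketch under simplifying assumptions: equal-speed links, no overlap of computation and communication, and a fixed per-step latency. The 10 GB gradient size, 64 GPUs, and 1 µs step latency in the usage lines are hypothetical values chosen for illustration.

```python
def ring_allreduce_seconds(message_bytes: float, num_gpus: int,
                           link_gbps: float, step_latency_s: float) -> float:
    """Rough alpha-beta estimate of one ring all-reduce.

    A ring all-reduce runs 2 * (N - 1) steps (reduce-scatter, then all-gather).
    In every step each GPU sends one chunk of message_bytes / N to its neighbor,
    so total time is steps * (per-step latency + chunk / link bandwidth).
    """
    if num_gpus < 2:
        return 0.0  # a single GPU has nothing to exchange
    steps = 2 * (num_gpus - 1)
    chunk_bytes = message_bytes / num_gpus
    link_bytes_per_s = link_gbps * 1e9 / 8  # Gb/s -> bytes/s
    return steps * (step_latency_s + chunk_bytes / link_bytes_per_s)


if __name__ == "__main__":
    # Hypothetical job: 10 GB of gradients, 64 GPUs, 1 microsecond per step.
    for gbps in (400, 800, 1600):
        t = ring_allreduce_seconds(10e9, 64, gbps, 1e-6)
        print(f"{gbps:>5} Gb/s links: {t * 1e3:.1f} ms per all-reduce")
```

Doubling link speed roughly halves the bandwidth term, while the latency term grows with the number of ring steps, so larger clusters become more sensitive to per-hop latency.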

However, AI isn’t just straining data centers; it’s also revolutionizing how they work.

AI Facilitating Operational Transformation

AI is also driving a number of innovations in data center networking. These are the most important:

- Predictive maintenance, which uses machine learning to anticipate hardware failures

- Automated traffic engineering, which optimizes routes dynamically in real time

- Intelligent cooling systems that adjust to thermal patterns and workload demands

- Security AI that detects and mitigates threats faster than human teams

Although accommodating AI workloads requires effort and substantial data center transformation, it also brings significant benefits. AI needs network efficiency, but it can also improve network efficiency; in effect, it can help accommodate its own needs.

The Impact on Network Operations

Artificial intelligence has quickly evolved from an experimental technology into a practical solution, and today it plays a central role in transforming network operations. Telecom providers and infrastructure managers are adopting AI-powered systems to automate previously laborious tasks, achieving significant improvements in both operational performance and service reliability. Applications range from basic productivity enhancements, such as virtual assistants that draw on corporate knowledge bases, to sophisticated network management functions. Advanced AI systems now handle more critical operations such as bandwidth allocation, equipment failure prediction, and infrastructure optimization, processing enormous datasets to identify and address potential problems. These advancements bring a double benefit: reduced dependence on manual monitoring, and more precise, real-time operational decisions.

Easier Network Management

Industry leaders highlight how AI-driven automation is transforming data center networking infrastructure, helping providers to optimize performance and reduce costs. AI improves operational efficiency across entire networks, allowing telecom companies to virtualize infrastructure and scale services more effectively.

Applications continue to expand. For instance, AI-powered microservices developed through the Infosys-NVIDIA collaboration help operators build customized solutions, from customer service chatbots to troubleshooting tools for network engineers. However, AI is more than a tool for improving efficiency: it is reshaping how networks manage growing device complexity and data traffic.

The Bandwidth Challenge: Data Center Networking Meeting AI’s Demands

The bandwidth requirements alone are staggering. Modern AI training clusters routinely require 800 Gbps to 1.6 Tbps connections between nodes, with latency thresholds measured in nanoseconds rather than milliseconds. Traditional three-tier network architectures simply can’t handle these demands, forcing operators to adopt new approaches:

- Clos fabric topologies that provide non-blocking connectivity between thousands of GPUs

- Ultra-low latency interconnects such as RoCEv2 and InfiniBand (for example, NVIDIA’s Quantum-2 platform)

- Advanced congestion control mechanisms to prevent packet drops during distributed training

- Optical networking innovations that push the physical limits of data transmission
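The non-blocking property of a Clos fabric comes down to port arithmetic. The sketch below sizes a two-tier leaf-spine (folded Clos) fabric built from identical switches, assuming all links run at the same speed and each leaf has exactly one uplink to every spine. The 64-port radix in the example is a hypothetical choice, not a specific product.

```python
from dataclasses import dataclass


@dataclass
class LeafSpineFabric:
    leaves: int
    spines: int
    hosts: int
    oversubscription: float  # host-facing / spine-facing bandwidth per leaf


def size_leaf_spine(radix: int, uplinks_per_leaf: int) -> LeafSpineFabric:
    """Size a two-tier leaf-spine fabric built from switches with `radix` ports.

    Each leaf uses `uplinks_per_leaf` ports toward the spines (one per spine)
    and the rest toward hosts. Each spine port connects one leaf, so the
    spine radix caps the number of leaves.
    """
    if not 0 < uplinks_per_leaf < radix:
        raise ValueError("uplinks_per_leaf must be between 1 and radix - 1")
    downlinks = radix - uplinks_per_leaf
    spines = uplinks_per_leaf
    leaves = radix
    return LeafSpineFabric(
        leaves=leaves,
        spines=spines,
        hosts=leaves * downlinks,
        oversubscription=downlinks / uplinks_per_leaf,
    )


if __name__ == "__main__":
    print(size_leaf_spine(64, 32))  # half the ports up: 1:1, i.e. non-blocking
    print(size_leaf_spine(64, 16))  # fewer uplinks: more hosts, but 3:1 oversubscribed
```

Splitting each leaf's ports evenly yields k²/2 hosts at 1:1 oversubscription for k-port switches; going beyond that typically means adding a third switching tier or using higher-radix switches.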

A Rapid Transformation

Recent industry surveys reveal just how rapidly this transformation is occurring. The 2024 Ciena Global Data Center Networking Report found that 87% of respondents expect to need 800 Gb/s or faster wavelengths for data center interconnects by 2030, and that 43% of new facilities are expected to be dedicated specifically to AI workloads. Perhaps most strikingly, 81% of respondents expect large language model training to routinely span multiple physical facilities, creating unprecedented demands for high-performance, long-distance networking. These changes are driving massive investments in new technologies, with 98% of operators prioritizing pluggable optics for their power efficiency and density advantages over traditional fixed transceivers.

Sustainability Concerns

Sustainability has emerged as perhaps the most pressing secondary challenge in this AI networking revolution. Current projections suggest AI data centers could consume 134 TWh annually by 2027, roughly equivalent to the annual electricity consumption of a mid-sized European country. The networking infrastructure alone accounts for a significant portion of this demand, particularly for long-haul connections between distributed training clusters. Operators are responding with a mix of short-term fixes and long-term architectural changes, and scaling sustainably is steadily becoming a major focus for the industry.
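To put the 134 TWh projection in perspective, an annual energy figure can be converted into the average continuous power it implies. This is plain unit arithmetic, assuming the load is spread evenly over an 8,760-hour year.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 h; leap years ignored


def average_power_gw(annual_twh: float) -> float:
    """Convert an annual energy figure (TWh/year) into equivalent continuous power (GW)."""
    return annual_twh * 1_000 / HOURS_PER_YEAR  # 1 TWh = 1,000 GWh


if __name__ == "__main__":
    print(f"134 TWh/year is about {average_power_gw(134):.1f} GW of continuous demand")
```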

These are some of the most significant areas of innovation:

Pluggable Optics

Pluggable optics are modular transceivers that enable high-speed data transmission in data center networks. Because they can be inserted into and removed from servers, routers, or switches, they allow easy upgrades and scaling. Supporting various speeds and fiber types, these transceivers optimize network performance and reduce costs. Their energy efficiency and small footprint make them well suited to AI workloads with massive bandwidth demands. New 800G OSFP modules and coherent pluggables play a critical role in meeting the growing demands of today’s data center networking.

Data center industry experts agree that the pluggable optics revolution deserves special attention as perhaps the most impactful near-term innovation. Modern 800G OSFP modules provide numerous advantages over traditional fixed optics:

- 40% lower cost per gigabit compared to previous generations

- 50% power savings through advanced DSP and modulation techniques

- Hot-swappable flexibility that simplifies upgrades and maintenance

- Denser faceplate utilization to handle growing port counts

Among other benefits, the technology is important for reducing power consumption and assisting data center consolidation efforts. Besides reducing the facility’s footprint, consolidation also has a great impact on power efficiency and network infrastructure scaling.
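Power claims for optics are easiest to compare as energy per bit. The sketch below uses hypothetical module power draws (12 W for both a 400G and an 800G module, not vendor specifications) to show how doubling the line rate within the same power envelope halves the energy per bit, which is one way a 50% per-bit saving can arise.

```python
def picojoules_per_bit(module_watts: float, rate_gbps: float) -> float:
    """Energy per transmitted bit in pJ: watts divided by bits per second."""
    joules_per_bit = module_watts / (rate_gbps * 1e9)
    return joules_per_bit * 1e12


if __name__ == "__main__":
    # Hypothetical module powers, not vendor specifications.
    old = picojoules_per_bit(module_watts=12.0, rate_gbps=400)
    new = picojoules_per_bit(module_watts=12.0, rate_gbps=800)
    print(f"old: {old:.1f} pJ/bit, new: {new:.1f} pJ/bit, "
          f"saving: {1 - new / old:.0%}")
```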

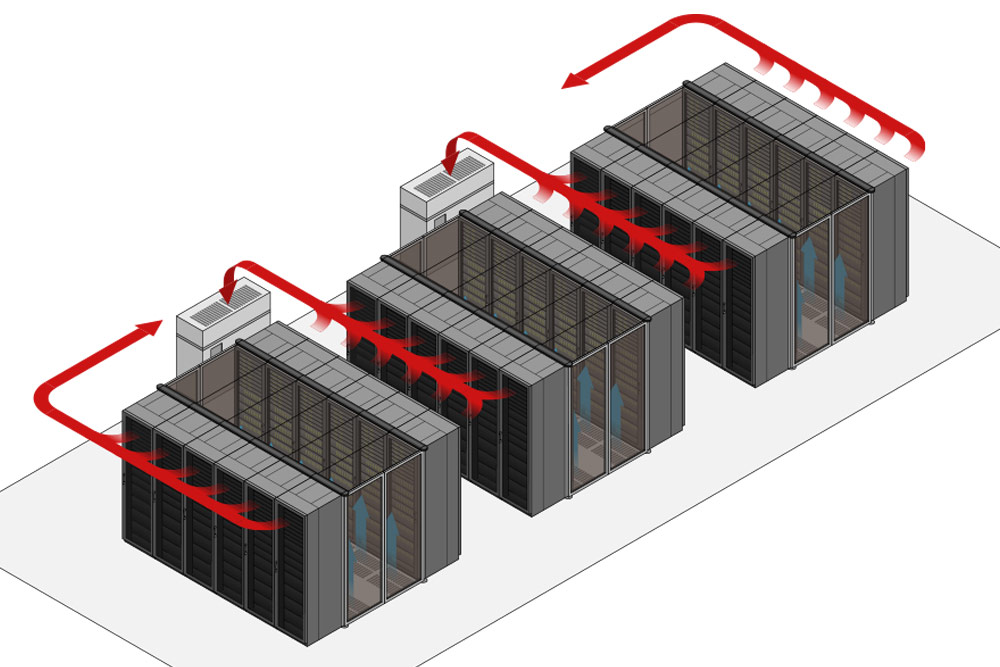

Liquid Cooling

Liquid cooling systems have become essential for high-density AI racks, reducing cooling energy consumption by up to 90% compared to traditional air cooling. Some hyperscalers are even experimenting with nuclear-powered data centers, with Talen Energy recently announcing a 960 MW campus specifically designed for AI workloads. At the physical layer, single-mode fiber has become the undisputed backbone for AI networking, though hollow-core fiber prototypes promise to cut propagation latency by roughly a third, which is especially promising for latency-sensitive applications.
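Cooling savings are commonly expressed through Power Usage Effectiveness (PUE): total facility power divided by IT equipment power. The sketch below assumes a hypothetical 1 MW IT load with 400 kW of cooling and 100 kW of other overhead, then applies the 90% upper-bound cooling reduction cited above. Pumps and other liquid-cooling overheads are not modeled separately.

```python
def pue(it_kw: float, cooling_kw: float, other_kw: float) -> float:
    """Power Usage Effectiveness: total facility power divided by IT power."""
    return (it_kw + cooling_kw + other_kw) / it_kw


def pue_with_cooling_cut(it_kw: float, cooling_kw: float, other_kw: float,
                         reduction: float) -> float:
    """PUE after cutting cooling power by `reduction` (0.9 means 90%)."""
    return pue(it_kw, cooling_kw * (1 - reduction), other_kw)


if __name__ == "__main__":
    # Hypothetical load split, for illustration only.
    print(f"air-cooled PUE:  {pue(1000, 400, 100):.2f}")
    print(f"90% cooling cut: {pue_with_cooling_cut(1000, 400, 100, 0.9):.2f}")
```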

Looking Ahead: What’s Needed for Data Center Networking With AI

The requirements of AI for data center networking will likely continue to outpace conventional improvements in silicon and fiber optics. High bandwidth is essential for AI; however, it’s not the only thing that’s needed.

AI changes both data center networking requirements and traffic patterns. Beyond a certain point, simply adding more hardware is not enough to keep network capacity in balance. Smart networks that adapt to new demands in real time, for example through multi-layer automation, will become necessary to add bandwidth dynamically, avoid congestion, and improve power use. Network slicing complements these automated frameworks by allowing each slice to be optimized and customized independently. Together, these practices make data center networking more efficient and flexible.

Beyond raw capacity, next-generation networks must be smarter, more adaptive, and energy efficient to keep up with changing needs.

The Role of AI in Predictive Maintenance and Network Resilience

One of the most transformative impacts of AI on data center networking is its ability to predict and prevent failures before they occur. Traditional network management relies on reactive troubleshooting: teams respond to outages, latency spikes, or hardware malfunctions only after they have already disrupted operations. AI can change this by enabling predictive maintenance, in which machine learning models analyze vast streams of telemetry data, such as temperature fluctuations, packet loss trends, and switch performance metrics, to identify early warning signs of potential failures.

For example, AI can detect a cooling system’s gradual efficiency decline or a fiber optic cable’s signal degradation long before it causes downtime. Flagging these issues early lets data center operators schedule maintenance before problems escalate, which minimizes disruptions and extends hardware lifespans.

Network Resilience

Beyond hardware, AI also enhances network resilience by autonomously mitigating cyber threats and traffic anomalies. Unlike rule-based security systems, AI-driven solutions (such as Cisco’s Encrypted Traffic Analytics or Juniper’s Mist AI) use behavioral analysis to detect zero-day attacks, DDoS attempts, or insider threats in encrypted traffic without decrypting it. In addition, AI-powered self-healing networks can automatically reroute traffic around failed nodes or congested paths, maintaining SLAs even during unexpected surges. This is particularly critical for AI/ML workloads, where intermittent latency can derail distributed training jobs.
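The rerouting half of a self-healing network can be illustrated with a plain graph search. The sketch below is a rule-based stand-in, not an AI model: once some component (for example, an anomaly detector) has marked nodes as failed, it finds the shortest remaining hop-count path with breadth-first search. The two-leaf, two-spine topology is hypothetical.

```python
from collections import deque
from typing import Optional


def reroute(topology: dict[str, list[str]], src: str, dst: str,
            failed: set[str]) -> Optional[list[str]]:
    """Shortest hop-count path from src to dst that avoids failed nodes (BFS).

    Returns None when the failures leave no surviving path.
    """
    if src in failed or dst in failed:
        return None
    previous: dict[str, Optional[str]] = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            # Walk the predecessor chain back to the source.
            path = []
            step: Optional[str] = node
            while step is not None:
                path.append(step)
                step = previous[step]
            return path[::-1]
        for neighbor in topology.get(node, []):
            if neighbor not in previous and neighbor not in failed:
                previous[neighbor] = node
                queue.append(neighbor)
    return None


if __name__ == "__main__":
    # Hypothetical two-leaf, two-spine fabric.
    fabric = {
        "leaf1": ["spine1", "spine2"],
        "leaf2": ["spine1", "spine2"],
        "spine1": ["leaf1", "leaf2"],
        "spine2": ["leaf1", "leaf2"],
    }
    print(reroute(fabric, "leaf1", "leaf2", failed=set()))
    print(reroute(fabric, "leaf1", "leaf2", failed={"spine1"}))
```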

Testing and Optimization

AI’s role in network automation will expand from operational efficiency to higher-stakes areas such as decision-making. New tools allow operators to test and optimize configurations in a risk-free environment before deployment. Meanwhile, generative AI is starting to assist in network design by proposing configurations based on performance data. As these technologies mature, AI is moving toward its ultimate role: becoming the central nervous system of data center networking.

AI-Optimized Data Center Networking Best Practices

Multi-Layer Automation for Dynamic Adaptation

AI workloads require networks that can adjust themselves in real time, so static, hardware-centric scaling is no longer sufficient. Instead, multi-layer automation (spanning physical, virtual, and software-defined layers) enables networks to dynamically allocate bandwidth, prevent congestion, and optimize power usage. AI-driven automation can predict traffic surges and reroute data flows before bottlenecks occur.
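AI-driven automation can use far richer models, but the core idea of acting on a forecast rather than on the current reading can be sketched with Holt's linear (double exponential) smoothing, a classical time-series method rather than a machine-learning model. The link capacity, the 80% trigger threshold, and the sample loads are hypothetical.

```python
def holt_forecast(samples: list[float], alpha: float = 0.5, beta: float = 0.5,
                  horizon: int = 1) -> float:
    """Holt's linear smoothing: track level and trend, then project ahead."""
    if len(samples) < 2:
        raise ValueError("need at least two samples to estimate a trend")
    level, trend = samples[0], samples[1] - samples[0]
    for x in samples[1:]:
        prev_level = level
        level = alpha * x + (1 - alpha) * (level + trend)
        trend = beta * (level - prev_level) + (1 - beta) * trend
    return level + horizon * trend


def should_reroute(link_gbps_samples: list[float], capacity_gbps: float,
                   threshold: float = 0.8, horizon: int = 1) -> bool:
    """Trigger rerouting if forecast utilization exceeds `threshold` of capacity."""
    forecast = holt_forecast(link_gbps_samples, horizon=horizon)
    return forecast / capacity_gbps > threshold


if __name__ == "__main__":
    # Hypothetical per-minute load on an 800 Gb/s link, rising steadily.
    load = [200.0, 300.0, 400.0, 500.0, 600.0]
    print(holt_forecast(load))          # projected load for the next interval
    print(should_reroute(load, 800.0))  # act before the link saturates
```

Here the current load (600 of 800 Gb/s, 75%) is still below the trigger, but the forecast (87.5%) crosses it, so traffic can be moved one interval earlier than a purely reactive rule would allow.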

Network Slicing for Customized Workloads

Network slicing allows operators to partition a single physical network into multiple virtual segments, each optimized for specific AI tasks (for example, training vs inference). This ensures low-latency paths for time-sensitive workloads while dedicating high-throughput lanes for data-intensive operations. Tailoring each slice to its workload makes it possible for data centers to maximize efficiency and reduce resource contention.
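On the bandwidth side, a slicing policy is at heart an allocation rule. The sketch below assumes a guaranteed-minimum-plus-weighted-share rule chosen for illustration; real deployments enforce slices through mechanisms such as QoS queues or virtual networks, and low-latency guarantees also need scheduling priority, which this arithmetic does not model. The slice names and figures are hypothetical.

```python
def allocate_slices(capacity_gbps: float,
                    slices: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Split link capacity among slices.

    Each slice maps to (guaranteed_gbps, weight). Every slice first receives
    its guarantee; leftover capacity is shared in proportion to the weights.
    """
    guaranteed = sum(g for g, _ in slices.values())
    if guaranteed > capacity_gbps:
        raise ValueError("slice guarantees exceed link capacity")
    spare = capacity_gbps - guaranteed
    total_weight = sum(w for _, w in slices.values())
    if total_weight == 0:
        return {name: g for name, (g, _) in slices.items()}
    return {name: g + spare * w / total_weight
            for name, (g, w) in slices.items()}


if __name__ == "__main__":
    # Hypothetical 800 Gb/s link shared by an inference and a training slice.
    plan = allocate_slices(800.0, {"inference": (100.0, 1.0),
                                   "training": (400.0, 3.0)})
    print(plan)
```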

Intelligent Traffic Engineering

Traditional load-balancing methods struggle with AI’s irregular traffic patterns. AI-powered traffic engineering uses machine learning to analyze flow dynamics and optimize routing decisions in real time. Techniques like adaptive flow scheduling and congestion-aware routing help minimize latency and packet loss and improve overall network resilience.
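Congestion-aware routing can be illustrated without any machine learning: given equal-cost candidate paths (as in a leaf-spine fabric) and measured link utilization, pick the path whose busiest link is least loaded. An ML-based system would typically substitute predicted utilization for the measured values used here; the topology and numbers are hypothetical.

```python
Link = tuple[str, str]


def bottleneck_utilization(path: list[str], link_util: dict[Link, float]) -> float:
    """Utilization (0.0 to 1.0) of the busiest link along a path."""
    return max(link_util[(a, b)] for a, b in zip(path, path[1:]))


def least_congested_path(paths: list[list[str]],
                         link_util: dict[Link, float]) -> list[str]:
    """Choose the candidate path whose busiest link has the most headroom."""
    return min(paths, key=lambda p: bottleneck_utilization(p, link_util))


if __name__ == "__main__":
    candidates = [["leaf1", "spine1", "leaf2"], ["leaf1", "spine2", "leaf2"]]
    util = {("leaf1", "spine1"): 0.9, ("spine1", "leaf2"): 0.2,
            ("leaf1", "spine2"): 0.5, ("spine2", "leaf2"): 0.6}
    print(least_congested_path(candidates, util))  # ['leaf1', 'spine2', 'leaf2']
```

Scoring by the bottleneck link, rather than average utilization, avoids steering flows onto a path that looks idle overall but contains one saturated hop.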

Energy Management

AI data center networks consume massive amounts of power. Smart power management, such as dynamic voltage scaling and workload-aware cooling, can reduce energy waste. Additionally, emerging technologies like co-packaged optics (CPO) and silicon photonics lower power consumption while increasing bandwidth density.
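The payoff from dynamic voltage scaling follows from the standard CMOS relation in which dynamic (switching) power scales with capacitance, the square of supply voltage, and clock frequency. The sketch assumes that relation holds and ignores leakage power; the 20% scaling step is hypothetical.

```python
def relative_dynamic_power(voltage_scale: float, freq_scale: float) -> float:
    """Relative dynamic power, using P_dyn proportional to C * V^2 * f.

    Capacitance C is fixed for a given chip, so only V and f change.
    Leakage (static) power is not modeled.
    """
    return voltage_scale ** 2 * freq_scale


if __name__ == "__main__":
    # Lower voltage and clock by 20% during a lightly loaded period.
    saving = 1 - relative_dynamic_power(0.8, 0.8)
    print(f"dynamic power saving: {saving:.1%}")
```

Because voltage enters squared, modest reductions in voltage and frequency together cut dynamic power by roughly half in this model.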

The Future of AI-Ready Networks

Vendors are moving toward disaggregated data center networking architectures, where switches, NICs, and accelerators operate as independent, programmable units. This approach allows faster upgrades and better scalability, which are crucial for keeping up with AI’s rapidly evolving hardware demands.

As AI models grow, the boundary between computing and networking blurs. In-network computing (for example, processing data within switches) and near-memory processing reduce data movement, cutting latency and power usage. Companies like NVIDIA (with DPUs) and Intel (with IPUs) are already driving this shift.

6G and Advanced Optics

Future AI clusters may leverage 6G wireless backhaul and terabit-scale optical interconnects to handle extreme bandwidth needs. Research into quantum networking and photonics-based AI accelerators could further revolutionize data center scalability.

Self-Optimizing Networks

The future of AI-driven data center networking hinges on smart automation, adaptive architectures, and energy-efficient innovation. Simply adding more hardware is no longer viable. Instead, networks are likely to evolve into self-optimizing ecosystems capable of anticipating demand.

Conclusion

The message for the future of data center networking is clear: incremental upgrades won’t be enough. “Surviving” the AI revolution will require a comprehensive rethinking of network architectures, power systems, and physical layer technologies. Those who fail to adapt risk being left behind as AI reshapes not just what we compute but how we connect computing resources. With 75% of new data centers expected to be AI-optimized by 2026, the future belongs to networks that can simultaneously deliver massive bandwidth, low latency, and sustainable operations. These will define the next era of data center networking.